# Airflow Kubernetes Operator How To

However, this can be a disadvantage depending on your latency needs: a task takes longer to start under the Kubernetes Executor because its start time now includes the Pod startup time. You can use Apache Airflow DAG operators with any cloud provider, not only GKE.

The articles airflow-on-kubernetes-part-1-a-different-kind-of-operator and Airflow Kubernetes Operator provide basic examples of how to use DAGs. The article Explore Airflow KubernetesExecutor on AWS and kops also gives a good explanation, with an example of how to use the airflow-dags and airflow-logs volumes on AWS.

Example: from airflow.operators.

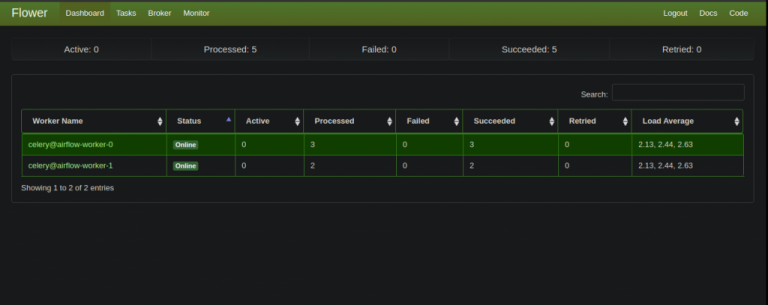

The Kubernetes Executor has an advantage over the Celery Executor: Pods are spun up only when they are required for task execution, whereas Celery workers are statically configured and run all the time, regardless of workload. In contrast to the Celery Executor, the Kubernetes Executor does not require additional components such as Redis and Flower, but it does require the Kubernetes infrastructure. One example of an Airflow deployment running on a distributed set of five nodes in a Kubernetes cluster is shown below.

Workflows are defined as code, with tasks that can be run on a variety of platforms, including Hadoop, Spark, and Kubernetes itself. Prerequisites: Kubernetes version > 1.9. All classes for this provider package are in python package. Backward compatibility of the APIs is not guaranteed for alpha releases.

I am using Apache Airflow, and in one of our DAGs a task uses the Kubernetes Pod Operator. This is being done to execute one of our application processes in a Kubernetes pod. The Kubernetes Pod Operator works well, and passing environment variables via the pod operator works well too. Passing -serviceaccount in airflow kubernetes pod operator. I currently have Airflow running in a Kubernetes cluster in Azure using the Helm chart for Apache Airflow. I am able to use the API from the VM where I port forward the web server, using the endpoi.

In your specific use case, it could look like the following: import datetime from airflow import models from import kubernetespodoperator from import sendemail from kubernetes.

'''Example DAG using KubernetesPodOperator.''' import datetime from airflow import models from import Secret from .operators.kubernetespod import ( KubernetesPodOperator, ) from kubernetes.

As of today, there is an ongoing issue with Airflow 1.10.2. The reported description is: "Related to this, when we manually fail a task, the DAG task stops running, but the Pod in the DAG does not get killed and". In addition, the timeout is only enforced for scheduled DagRuns, and only once the number of active DagRuns equals max_active_runs.

When a DAG submits a task, the KubernetesExecutor requests a worker pod from the Kubernetes API. The worker pod then runs the task, reports the result, and terminates.
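The worker pod lifecycle of the KubernetesExecutor (request a pod when a task is submitted, run the task, report the result, terminate) can be illustrated with a small simulation. This is only a sketch in plain Python: `FakeCluster` and `run_task_in_pod` are hypothetical names, nothing here talks to a real Kubernetes API, and it is not how Airflow itself is implemented.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for the Kubernetes API."""

    running_pods: Dict[str, str] = field(default_factory=dict)
    events: List[str] = field(default_factory=list)

    def create_pod(self, pod_name: str, task_id: str) -> None:
        # A pod exists only from this point until delete_pod is called.
        self.running_pods[pod_name] = task_id
        self.events.append(f"created {pod_name}")

    def delete_pod(self, pod_name: str) -> None:
        del self.running_pods[pod_name]
        self.events.append(f"deleted {pod_name}")


def run_task_in_pod(cluster: FakeCluster, task_id: str,
                    task: Callable[[], object]) -> str:
    """Request a pod for one task, run it, report its state, then clean up."""
    pod_name = f"worker-{task_id}"
    cluster.create_pod(pod_name, task_id)
    try:
        task()
        state = "success"
    except Exception:
        state = "failed"
    finally:
        # The pod is removed whether the task succeeded or failed.
        cluster.delete_pod(pod_name)
    return state


cluster = FakeCluster()
states = {
    "extract": run_task_in_pod(cluster, "extract", lambda: 1 + 1),
    "load": run_task_in_pod(cluster, "load", lambda: 1 / 0),
}
print(states)
print(cluster.events)
print("pods still running:", cluster.running_pods)
```

No pod remains once both tasks have finished, which is the property that distinguishes this model from statically configured Celery workers. The cost is that every task pays for pod creation before it can start.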

By the way, take a look at the persistent volumes associated with our Minikube cluster and check that you get the same airflow- volumes, as we are going to interact with them.

The KubernetesExecutor requires a non-SQLite database in the backend, but no external brokers or persistent workers are needed. For these reasons, we recommend the KubernetesExecutor for deployments that have long periods of dormancy between DAG executions. The KubernetesExecutor runs as a process in the Scheduler that only requires access to the Kubernetes API (it does not need to run inside a Kubernetes cluster).

The Airflow Operator is still under active development and has not been extensively tested in production environments. At this point you have a fully functional instance of Apache Airflow with the Kubernetes Executor.

Here is an example of a task with both features:
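As a minimal sketch, assuming the two features are a per-task pod_template_file and a pod_override, the layering can be modeled in plain Python so that it runs without Airflow installed. The template names, labels, image names, and the helpers `deep_merge` and `resolve_pod` are hypothetical. In recent Airflow versions pod_override is a `kubernetes.client.models.V1Pod` object rather than a dict, and Airflow's own merge logic is more involved than this.

```python
import copy

# Hypothetical stand-ins for pod template YAML files, keyed by file name.
TEMPLATES = {
    "default_template.yaml": {
        "metadata": {"labels": {"tier": "default"}},
        "spec": {"containers": [{"name": "base", "image": "example/airflow:default"}]},
    },
    "basic_template.yaml": {
        "metadata": {"labels": {"tier": "basic"}},
        "spec": {"containers": [{"name": "base", "image": "example/airflow:basic"}]},
    },
}

# Assumption: this plays the role of the template named in airflow.cfg.
DEFAULT_TEMPLATE = "default_template.yaml"


def deep_merge(base, override):
    """Return a copy of base with override applied; nested dicts merge key by key."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            # Scalars and lists in the override replace the base value wholesale.
            merged[key] = copy.deepcopy(value)
    return merged


def resolve_pod(executor_config):
    """Pick the per-task template if given, else the default, then apply the override."""
    template_name = executor_config.get("pod_template_file", DEFAULT_TEMPLATE)
    return deep_merge(TEMPLATES[template_name], executor_config.get("pod_override", {}))


# Per-task configuration using both features.
executor_config = {
    "pod_template_file": "basic_template.yaml",
    "pod_override": {"metadata": {"labels": {"release": "stable"}}},
}

pod = resolve_pod(executor_config)
print(pod["metadata"]["labels"])
print(pod["spec"]["containers"][0]["image"])
```

The per-task template supplies the base values, and the override only adds or changes the fields it names, so several tasks can share one template while differing in a label or two.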

This will replace the default pod_template_file named in the airflow.cfg and then override that template using the pod_override. You can also create a custom pod_template_file on a per-task basis so that you can recycle the same base values between multiple tasks.

The Kubernetes executor was introduced in Apache Airflow 1.10.0. It will create a new pod for every task instance. Example Kubernetes files are available at scripts/in_container/kubernetes/app/.

KubernetesPodOperator launches Kubernetes pods in your environment's cluster. In comparison, Google Kubernetes Engine operators run Kubernetes pods in a. The KubernetesPodOperator enables task-level resource configuration and is optimal for custom Python dependencies that are not available through the public PyPI. It launches a Kubernetes pod that runs a container as specified in the operator's arguments.
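To make concrete how KubernetesPodOperator arguments describe the container that runs in the launched pod, the sketch below maps operator-style arguments onto a Kubernetes Pod manifest. It is an illustration in plain Python, not the operator's actual code: `build_pod_manifest` is a hypothetical helper, the image, names, and values are made up, and the parameter names merely mirror arguments commonly passed to the operator (name, namespace, image, cmds, arguments, env_vars, service_account_name).

```python
import json


def build_pod_manifest(name, namespace, image, cmds=None, arguments=None,
                       env_vars=None, service_account_name=None):
    """Translate operator-style arguments into a Pod manifest dict (illustrative only)."""
    container = {"name": "base", "image": image}
    if cmds:
        container["command"] = list(cmds)      # entrypoint of the container
    if arguments:
        container["args"] = list(arguments)    # arguments passed to the entrypoint
    if env_vars:
        # Kubernetes expects env as a list of {"name": ..., "value": ...} entries.
        container["env"] = [
            {"name": key, "value": str(value)} for key, value in env_vars.items()
        ]

    spec = {"restartPolicy": "Never", "containers": [container]}
    if service_account_name:
        spec["serviceAccountName"] = service_account_name

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


# Hypothetical values for an application process run in its own pod.
manifest = build_pod_manifest(
    name="app-process",
    namespace="default",
    image="example/app:1.0",
    cmds=["python", "-m", "app"],
    arguments=["--run-date", "2024-01-01"],
    env_vars={"APP_ENV": "staging"},
    service_account_name="airflow-worker",
)
print(json.dumps(manifest, indent=2))
```

Environment variables and the service account end up as ordinary fields of the pod spec, which is why both can be set per task.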
